How to run experiments in a B2B sales-led environment - 3x growth in 12 months: a Contractbook case study

How to run experiments when you are limited on traffic, and still get meaningful results.

Today I’m talking with Kamil Jakubczyk from Contractbook, who leads the Growth Marketing team.

The case we are discussing today is a wonderful example of how to approach experimentation in B2B sales-led SaaS.

The problem most B2B companies face is relatively low traffic and even fewer conversions. That's far from ideal for experimentation: to run tests efficiently, you usually want hundreds of thousands of sessions a month.

When Kamil joined Contractbook in January 2022, he realized that marketing was lagging behind the product. The website was outdated, didn't tell the full product story or reflect current product capabilities, and overall lacked a strong USP.

But that wasn't the biggest problem in Contractbook's commercial motion - the conversion rate was. A visitor-to-SQL rate of 0.3% was a clear signal that they were missing a lot of opportunities.

“I knew we could do much better. We had plenty of external benchmarks, identified gaps, and strong signals from customers saying clearly that we were not showing enough value. So we decided to fix that.”

And so they did: Kamil and the team introduced an experimentation program that more than tripled the conversion rate in 12 months. But before we get into that, let's set some context.

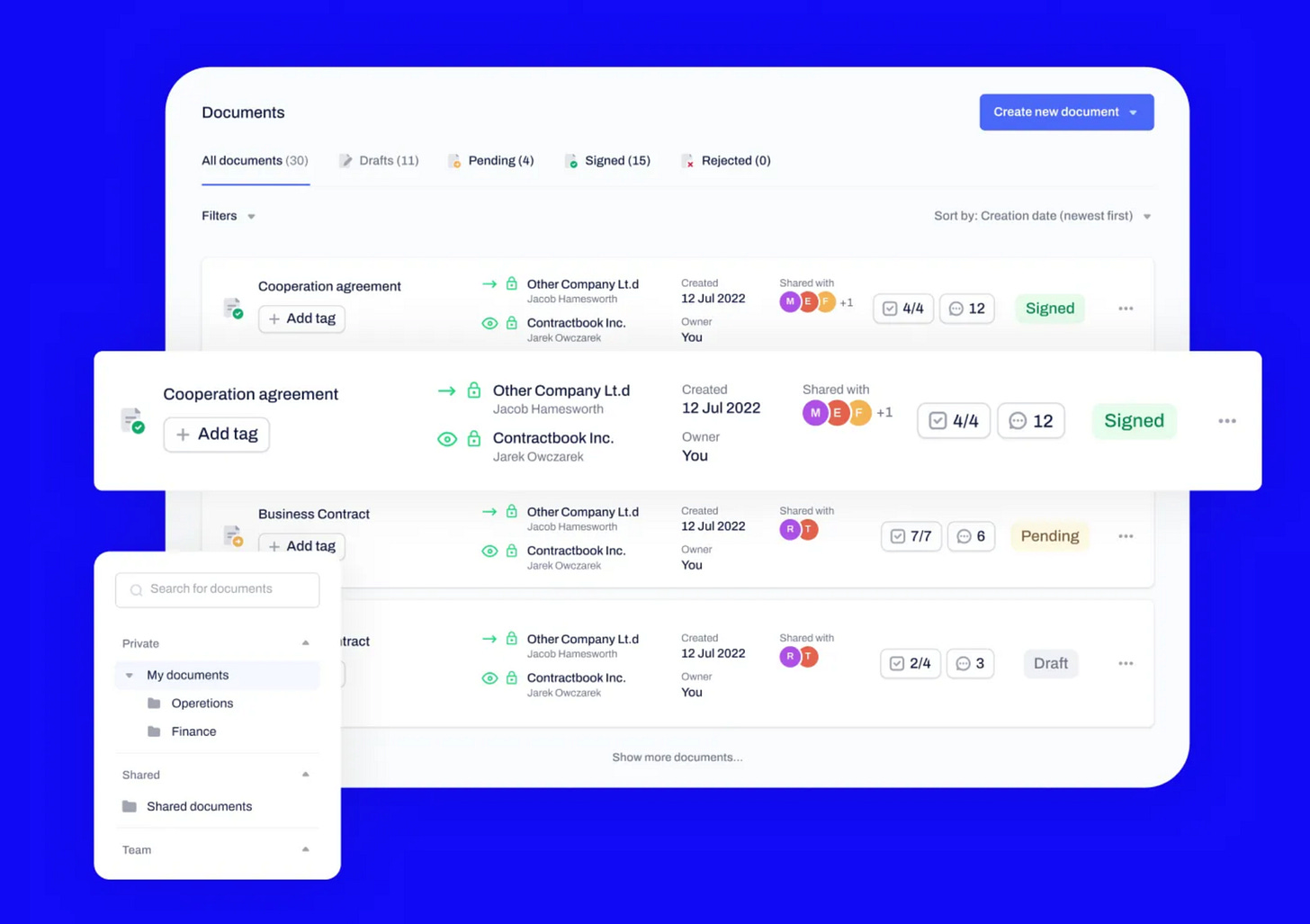

What is Contractbook

Contractbook is a Series B company (a $30M round, $40M+ in total funding) that tackles the burden of contract management.

In short, it centralizes contract work within an organization and supports every department that deals with contracts, such as operations, accounting, legal, or finance.

Old Way: Each team within the company has its own process for contract flow. Best case: a written process somewhere in the docs and a shared Google Drive. Worst case: no process at all, with everything done by email and by hand.

New Way: The entire contract workflow, from organization, collaboration, and signature collection to status tracking and automation, in one tool with every department on board.

Growth Motion

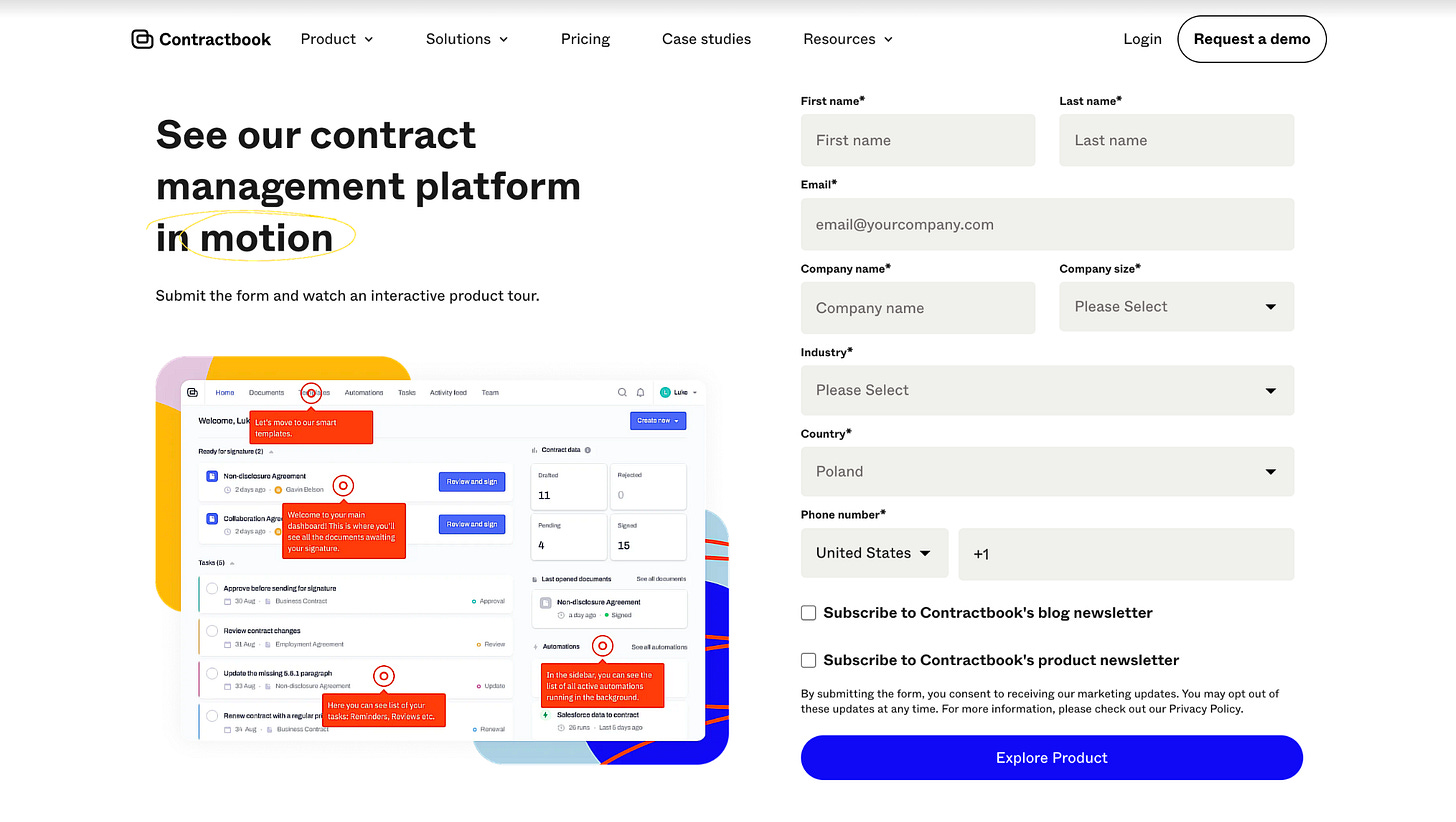

Contractbook has a traditional high-touch, sales-driven monetization funnel with high friction. No freemium, no trial. Potential customers have to go through a demo with sales to experience the product (top-down adoption).

To feed sales with leads, they rely heavily on paid acquisition through search ads and Capterra.

The problem the company faced was poor visitor-to-SQL conversion: around 0.3%.

“My main job was to get more leads, but I realized that adding more to the top of the funnel wouldn't give much leverage, because I'd lose most of that traffic along the way. I knew I had to optimize conversion, but the problem was low traffic. So I had to find a way to experiment on the funnel, and waiting months for statistical significance was not an option.”

For those new to experimentation: statistical significance matters because it gives you a level of confidence that your results are real and not due to chance. Without it, a change you ship could have the opposite effect of what you intended.
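To make “not due to chance” concrete, here is a minimal sketch of the classic frequentist check for conversion rates, a two-sided two-proportion z-test, using only Python's standard library. The visitor and SQL counts are hypothetical (10,000 visitors per variant at roughly the 0.3% baseline mentioned above), not Contractbook's data.

```python
from math import sqrt
from statistics import NormalDist


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int) -> tuple[float, float]:
    """Two-sided pooled z-test for a difference between two conversion rates.

    Returns (z statistic, p-value).
    """
    rate_a = conv_a / n_a
    rate_b = conv_b / n_b
    # Pooled rate under the null hypothesis that both variants convert equally
    pooled = (conv_a + conv_b) / (n_a + n_b)
    std_err = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (rate_b - rate_a) / std_err
    # Probability of a difference at least this extreme, in either direction
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


# Hypothetical counts: original 30 SQLs, challenger 37 SQLs, 10,000 visitors each
z, p = two_proportion_z_test(30, 10_000, 37, 10_000)
print(f"observed lift: {37 / 30 - 1:.1%}, z = {z:.2f}, p = {p:.2f}")
```

Even though the challenger shows an observed lift of roughly 23% in this made-up example, the p-value lands far above the conventional 0.05 threshold, so a frequentist test calls it inconclusive. That is the low-traffic trap in a nutshell.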

Key question: how do you run tests when reaching statistical significance simply takes too long?

Experimentation - Bayesian to the Rescue

This problem is not unique to Contractbook. Many B2B companies struggle to achieve statistically significant results due to limited traffic and a relatively low conversion rate.

So, is there a solution?

Let me quickly introduce two statistical methods for evaluating test results, to give you better context for what we're dealing with.

We'll look at the more common one (frequentist) and the less common one (Bayesian).

In simplified terms, frequentist statistics gives you a fairly straightforward answer: win, lose, or inconclusive, under a set of assumptions that include the confidence level and statistical power.

It’s ideal for large-scale experimentation programs because it's one of the most rigorous statistical tools out there. It also allows for tight control of error rates across the whole experimentation program and gives you sophisticated tools for understanding the big picture once hundreds of tests have been run.

The main drawback of this method is that it often requires a large sample size to detect an effect in a reasonable timeframe. This is especially challenging in B2B, where both volumes and conversion rates (another important factor in reaching statistical significance) tend to be lower.
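To see why low baseline rates hurt so much, here is a rough sketch of the standard normal-approximation sample-size formula for comparing two proportions (two-sided test, 5% significance and 80% power by default). The baselines and the 20% lift below are illustrative assumptions, not figures from Contractbook.

```python
from math import ceil
from statistics import NormalDist


def sample_size_per_variant(baseline: float, relative_lift: float,
                            alpha: float = 0.05, power: float = 0.8) -> int:
    """Approximate visitors needed in EACH variant to detect a relative lift.

    Uses the unpooled normal approximation for a two-sided two-proportion test.
    """
    p1 = baseline
    p2 = baseline * (1 + relative_lift)
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # about 1.96 for alpha = 0.05
    z_beta = NormalDist().inv_cdf(power)           # about 0.84 for 80% power
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    return ceil((z_alpha + z_beta) ** 2 * variance / (p2 - p1) ** 2)


# Same 20% relative lift, two hypothetical baselines
for baseline in (0.05, 0.003):
    n = sample_size_per_variant(baseline, relative_lift=0.20)
    print(f"baseline {baseline:.1%}: ~{n:,} visitors per variant")
```

Under these assumptions, a 5% baseline needs on the order of 8,000 visitors per variant, while a 0.3% visitor-to-SQL baseline needs well over 100,000, because the absolute difference you are trying to detect shrinks along with the rate.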

How do you deal with this? One solution is to use a Bayesian method, which can significantly reduce the required sample size.

Instead of a binary answer (hit or miss), the Bayesian approach gives you a probabilistic outcome.

Instead of saying “the original won (under our experimentation program’s assumptions)”, we say “here’s the estimated change, and here’s the probability that the challenger variant is better”. This turns the result into a business decision, which leaves more room for interpretation than the frequentist approach.

Here’s a sample A/B test outcome as Bayesian statistics reports it:

During the test, the challenger variant generated an estimated 13.2% uplift in conversion rate, with a 94% overall probability of performing better than the original.
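Here is a minimal sketch of how a statement like that can be produced, assuming the simplest common setup: a Beta-Binomial model with a uniform Beta(1, 1) prior on each variant's conversion rate, estimated by Monte Carlo sampling. Testing tools differ in their priors and in how they report uplift, so treat this as an illustration rather than the exact method behind the numbers in this article. The counts are hypothetical.

```python
import random
from statistics import median


def bayesian_ab(conv_a: int, n_a: int, conv_b: int, n_b: int,
                draws: int = 50_000, seed: int = 7) -> tuple[float, float]:
    """Return (P(challenger beats original), median relative uplift).

    Each variant's conversion rate gets a Beta(1 + conversions, 1 + non-conversions)
    posterior, i.e. a uniform Beta(1, 1) prior updated with the observed data.
    """
    rng = random.Random(seed)
    wins = 0
    uplifts = []
    for _ in range(draws):
        # Draw one plausible conversion rate per variant from its posterior
        rate_a = rng.betavariate(1 + conv_a, 1 + n_a - conv_a)
        rate_b = rng.betavariate(1 + conv_b, 1 + n_b - conv_b)
        wins += rate_b > rate_a
        uplifts.append(rate_b / rate_a - 1)
    return wins / draws, median(uplifts)


# Hypothetical counts: original 30 SQLs, challenger 37 SQLs, 10,000 visitors each
prob_better, uplift = bayesian_ab(30, 10_000, 37, 10_000)
print(f"estimated uplift {uplift:.1%}, {prob_better:.0%} chance the challenger is better")
```

On these made-up counts, the answer comes out at around an 80% chance that the challenger is better. That is not a clean “win”, but it is something a business can act on, or use to decide whether to keep the test running.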

While imperfect and not always straightforward, this approach greatly expands the pool of companies that can harness the power of experimentation.

This is the approach Kamil took to improve Contractbook's conversion rate.

The business problem - Low problem awareness, no solution intent

To understand why users did not want to convert, Kamil and the team collected qualitative and quantitative data. User journey drop-off analysis, customer interviews, and sales feedback pointed to a clear problem definition.

Feedback from sales and all the data points was clear: users who scheduled a demo had no idea what the product did or how they could benefit from it.

“Problem-solution awareness was poor: people who dealt with contracts had never imagined that the whole process could be more efficient. Hence we had low-intent traffic. They had no buying intention, and sales couldn’t push them down the funnel.”

In addition, the existing sales-led motion created a lot of friction. It required users to make a strong commitment, sharing a lot of personal data and spending time on a call, without understanding the value they could get.

Why was it painful?

For Growth - poor conversion.

For Sales - instead of selling, they had to spend time educating people.

For Marketing - it’s hard to show people how a complicated, nuanced process can become more efficient when they can’t access the product.

Solution - have your cookie and eat it too

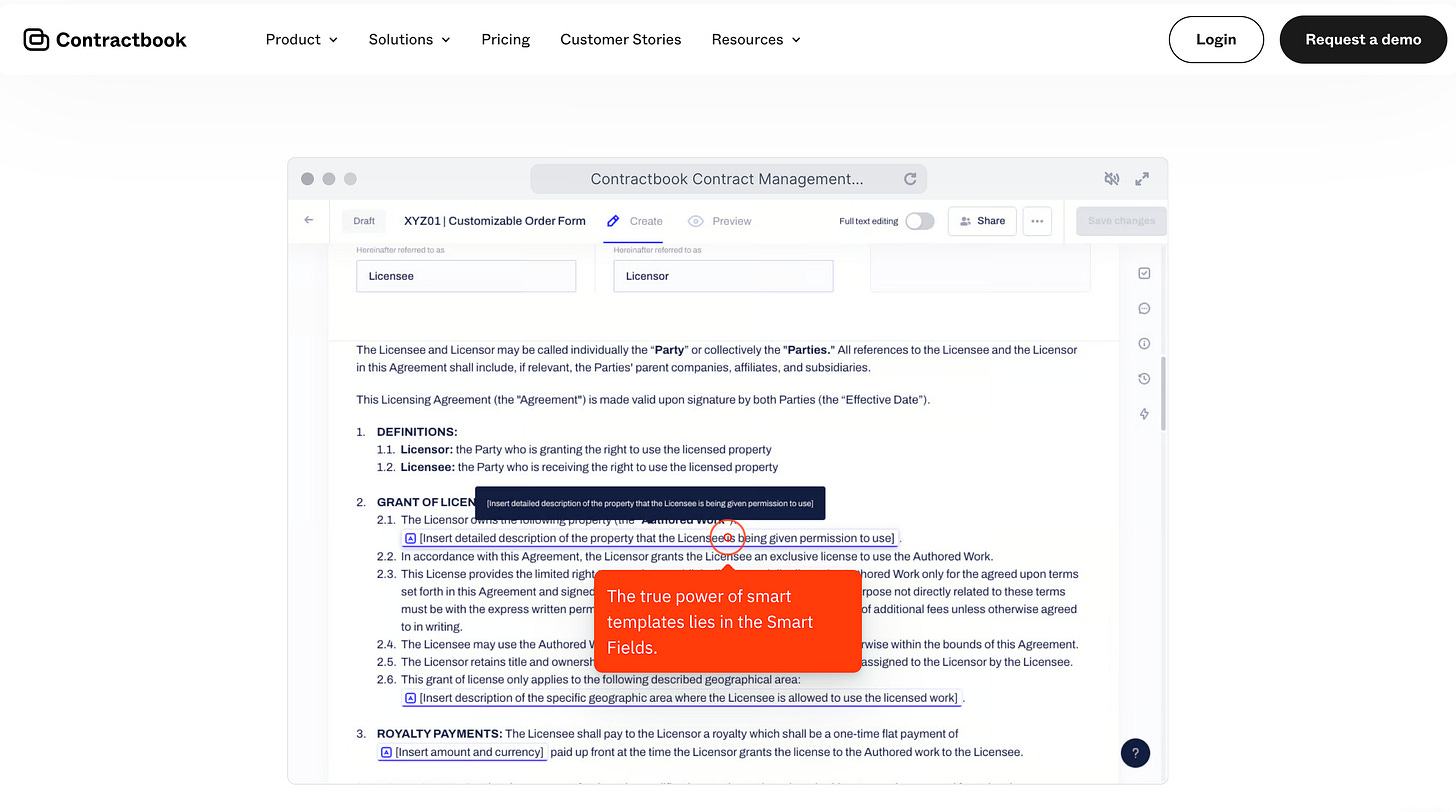

The team built the solution around the idea of letting users experience the product without actually accessing it (so it wouldn't cannibalize the sales funnel): an interactive demo.

The new demo still requires users to fill out a form (so leads enter the sales pipeline and can be nurtured), but friction is greatly reduced: trying a demo on your own takes much less commitment than talking to a salesperson. The interactive demo let users who weren't yet convinced play with the product and form their own assessment (or build solution awareness, in marketing terms).

On top of that, the team wanted to verify whether adding a second call-to-action could improve conversion. That might seem counterintuitive, as we are taught to reduce the number of CTAs, but behavioral psychology describes a concept called single-option aversion: we are reluctant to pick an option, even one we like, when no alternatives are offered.

For the MVP, they used Walnut, a tool they were already using internally, to do the heavy lifting and create the product tour.

The team started to execute on the idea - they created a product tour, crafted a new conversion page with a new form, and set up the CRM automation.

Experimentation begins

The primary metric was the visitor-to-SQL conversion rate, and the trade-off metric was the visitor-to-CTA-click rate, to make sure the additional CTA didn’t distract users from the primary goal.

(A trade-off metric is one whose decrease would support a counter-hypothesis, showing what may go wrong with the test. In other words, it's the metric you monitor to make sure you don't harm the user experience.)
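The relationship between the two metrics can be written down as a simple decision rule. In this sketch, both probabilities are assumed to come from a Bayesian comparison like the one described earlier; the 95% and 80% thresholds and the trade-off probability in the example are hypothetical values, not ones reported by Contractbook.

```python
from dataclasses import dataclass


@dataclass
class MetricResult:
    name: str
    prob_variant_better: float  # posterior probability that the variant beats the original


def decide(primary: MetricResult, trade_off: MetricResult,
           win_threshold: float = 0.95, harm_threshold: float = 0.80) -> str:
    """Hypothetical ship/hold rule combining a primary and a trade-off metric."""
    prob_trade_off_worse = 1 - trade_off.prob_variant_better
    # The trade-off metric acts as a guardrail: a likely drop blocks the launch
    if prob_trade_off_worse >= harm_threshold:
        return f"hold: {trade_off.name} probably got worse"
    if primary.prob_variant_better >= win_threshold:
        return f"ship: {primary.name} very likely improved"
    return "keep testing or make a judgment call"


# 0.99 mirrors the primary-metric result reported below; 0.70 is a made-up value
print(decide(MetricResult("visitor-to-SQL", 0.99), MetricResult("visitor-to-CTA click", 0.70)))
```

Checking the guardrail first means a strong primary result cannot hide clear damage to the trade-off metric; where to set the thresholds remains a business call.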

First, the team launched the experiment on the homepage, which had the most traffic and brought the most conversions, so results came faster. Positive results there gave the team enough confidence to roll the same solution out to the PPC landing page. The order mattered: the paid landing page had less traffic, and that traffic was expensive, so a failed test there would have been painful.

Fortunately, both tests were positive and built strong confidence in the proposed solution.

What were the test results?

The primary metric (visitor-to-SQL conversion) went up by 23.29%, and according to the Bayesian analysis, the new version had a 99% chance of beating the original.

Surprisingly, the trade-off metric also went up, by 4.94% (though the change was not statistically significant). Even with the single-option aversion hypothesis in mind, it was still counterintuitive that giving users more options would make them more likely to choose one. The team had considered that the second CTA might be a distraction, but the trade-off metric gave no sign of that.

The team kept the new solution, which also had some interesting positive side effects.

Side-effects

One of the most interesting parts of experimentation is that many tests bring unexpected side effects, or, as in this case, new opportunities. Contractbook relies heavily on paid acquisition, and this experiment had an interesting positive side effect there.

By launching a second user journey (a secondary funnel), they increased the total number of meaningful conversions. Paid platform algorithms rely heavily on the conversion data you send them to pick the right audience. As a rule of thumb, the more conversion data you send an ad platform, the better it can optimize the audience (selecting users who are more likely to convert).

It also increased solution (and brand) awareness, as more people, especially the undecided, had a chance to form their own opinion about what Contractbook offers. More than 10% of all deals now start in this journey, and at least 16% of deals are influenced by the interactive demo during the buying process. That led to the last side effect: a new, growing pool of users at a specific stage of the funnel, which created an opportunity for further lead nurturing.

“Interestingly, this new pool of users was big enough to open a path to very successful, ROI-positive remarketing campaigns that push them straight to the sales demo.”

Thanks to the experimentation mindset Kamil brought to the company, conversion tripled within 12 months. This obviously wasn't the only test (there were more than 20), but it proved that there is room for experimentation at Contractbook.

When to run tests in B2B - my thoughts.

Obviously, not every company should be running experiments. “Experimentation” is sometimes treated as the holy grail by “Growth” people. In reality, it's a decision-making tool that works in certain environments.

A/B tests can be expensive in resources (team time), in money (if paid traffic is involved), and in time, since they can delay a decision. There are three key factors to weigh when deciding whether to run an experiment:

Could implementing the project hurt a key business metric? If your confidence is low or there are known unknowns, consider running a test, since you don’t want to damage your most important source of deals.

Is the area where you want to experiment meaningful for the business? If not, just ship it. You don’t want to delay the launch by implementing the test, waiting for statistical significance, and then cleaning it up.

Do you have enough traffic to get results quickly? The less traffic and the fewer conversions you have, the longer it takes to reach significance. There are ways to shorten the wait, such as using Bayesian methods or testing changes with large expected effects, but with low traffic it will still take a long time. Waiting a whole quarter to make a decision rarely makes sense.

To expand on the last point, here is a quote from Ronny Kohavi (ex-Airbnb, Microsoft, Amazon) from an interview with Lenny Rachitsky:

And one of the things I show there is that unless you have at least tens of thousands of users, the math, the statistics just don't work out for most of the metrics that you're interested in. In fact, I gave an actual practical number of a retail site with some conversion rate, trying to detect changes that are at least 5% beneficial, which is something that startups should focus on. They shouldn't focus on the 1%, they should focus on the 5 and 10%. Then you need something like 200,000 users. So start experimenting when you're in the tens of thousands of users. You'll only be able to detect large effects. And then once you get to 200,000 users, then the magic starts happening. Then you can start testing a lot more.

Full interview is linked at the end.
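Kohavi's point can be made concrete with the rule of thumb he has popularized for roughly 80% power at a 5% significance level: n ≈ 16σ²/δ² users per variant, where σ² = p(1 − p) for a conversion rate p and δ is the absolute change you want to detect. The sketch below turns that into an expected test duration. The 0.3% baseline echoes the article; the 20,000 weekly visitors are a hypothetical assumption.

```python
from math import ceil


def weeks_to_detect(baseline: float, relative_lift: float, weekly_visitors: int,
                    variants: int = 2) -> float:
    """Rough test duration using the n ≈ 16 σ² / δ² rule of thumb per variant."""
    variance = baseline * (1 - baseline)  # σ² for a conversion rate
    delta = baseline * relative_lift      # absolute change to detect
    n_per_variant = ceil(16 * variance / delta ** 2)
    # Traffic is split across all variants, so the total sample is n * variants
    return n_per_variant * variants / weekly_visitors


# Hypothetical traffic: 20,000 visitors a week at a 0.3% visitor-to-SQL rate
for lift in (0.05, 0.25):
    weeks = weeks_to_detect(0.003, lift, weekly_visitors=20_000)
    print(f"{lift:.0%} relative lift: ~{weeks:.0f} weeks")
```

Under these assumptions, detecting a 5% lift would take around four years, while a 25% lift takes roughly two months. That is why low-traffic teams are better off testing bold changes with large expected effects.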

Key Takeaways & Reflections

Building an interactive demo is a good way to lower the entry barrier for customers of B2B companies whose innovative products face low awareness or high product complexity.

It’s also a good way for sales-led companies to check whether the product is mature enough for a product-led approach, or whether that approach is worth investing in.

You don’t have to reinvent the wheel. The team took inspiration from Walnut rather than brainstorming and building their own ideas from scratch. Get inspired, then test it.

The Bayesian approach lets you reduce the sample size required to run an experiment, but the trade-off is “confidence”: you still have to make a business decision.

Not everything should become an experiment. Tests are expensive in terms of resources, but also because they delay the decision. Before you decide to run a test, you should consider:

Could implementing the project hurt a key business metric?

Is the area where we want to experiment meaningful for the business? If not, just ship it.

Do you have enough traffic to get results quickly?

If you are reading this, you are either very kind or you really liked this episode. Either way, thank you!

I’m interested in hearing from you - if you have any feedback, please respond to this email or hit me up on LinkedIn.

P.S. If you have an interesting case you'd like to share - contact me.